PySpark allows you to print a nicely formatted representation of your dataframe using the show() DataFrame method.

This is useful for debugging, understanding the structure of your dataframe and reporting summary statistics.

Unfortunately, the output of the show() method is ephemeral and cannot be stored in a variable for later use. For example, you might want to store this summary output and use it in a email that is sent to stakeholders when your pipeline has completed.

It is surprising that there is still no way to store the string representation of a dataframe natively using PySpark.

But there is a workaround that makes it is possible if you have a quick look at the PySpark source code.

A naive approach

So why can’t you just save the output of df.show() to a variable?

Let’s see what happens using a naive approach and just assign the show() output to a variable.

from pyspark.sql import SparkSession

spark = SparkSession.builder.getOrCreate()

# create an example dataframe

columns = ["county", "revenue"]

data = [("uk", 1000), ("us", 2000), ("germany", 3000), ("france", 4000)]

df = spark.createDataFrame(data, columns)

# 'save' the output to a variable (doesn't work)

summary_string = df.show()

# print the variable value

print(summary_string)

# check the variable type

print(type(summary_string))

When we run the code above, we get the following output…

+------+-------+

|county|revenue|

+------+-------+

| uk| 1000|

| us| 2000|

| de| 3000|

| fr| 4000|

+------+-------+

None

<class 'NoneType'>

You might think, great that worked! The dataframe summary correctly printed to the terminal.

But actually, the summary_string variable is None.

The dataframe string representation was printed to the terminal when df.show() was called but did not get assigned to the summary_string variable.

So what’s going on?

“Software is not magic!”

This is a quote that I read in a blog a while ago (sorry I cannot remember the original source) and it has suck with me since. If something is weird, just look at the underlying source code. It isn’t magic. There will be a logical reason for the behaviour.

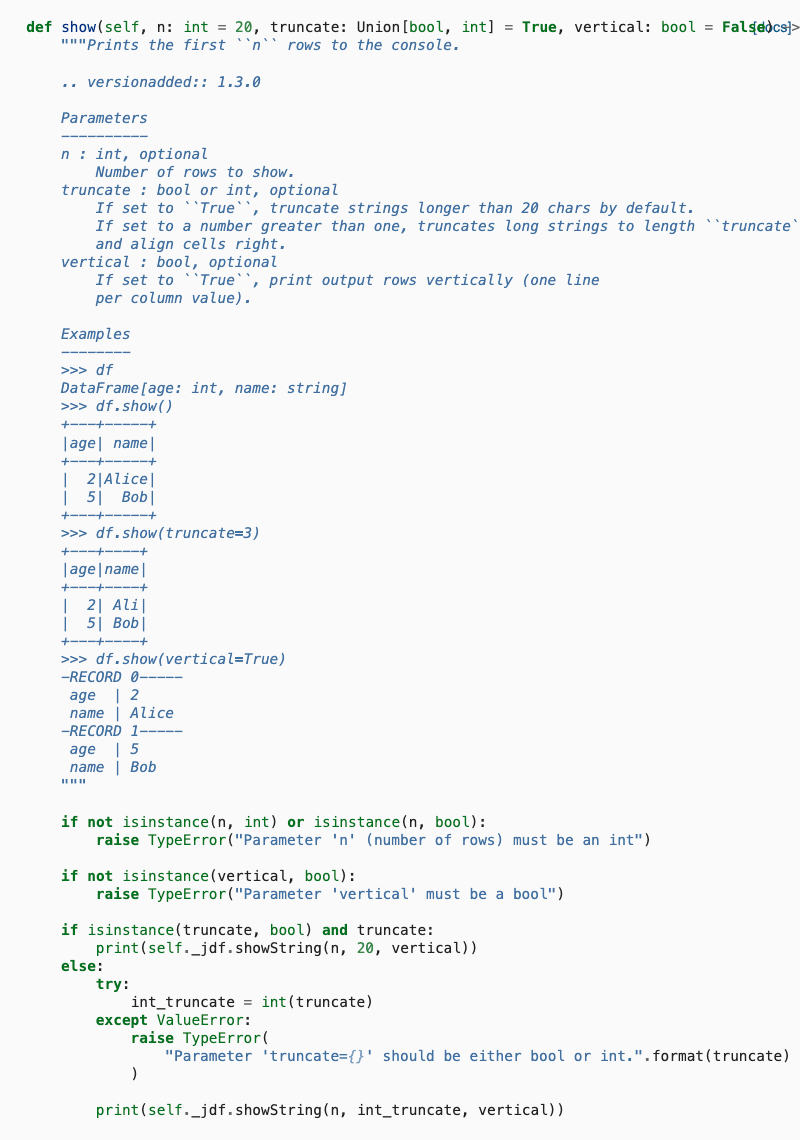

Let’s look at the PySpark source code to see what is going on…

There are two things to notice:

- The

showmethod doesn’t return anything - Under the hood the method is calling a java function self._jdf.showString()

As the method only ‘prints’, it is now unsurprising that nothing is returned by the function.

We can see that the show method is calling some kind of internal JavaObject

which creates and prints the string representation of the dataframe.

Let’s try and use this self._jdf.showString() method for our own purposes.

The workaround

It can be difficult to follow source code for the Java internals of PySpark.

I couldn’t find the underlying source code for the self._jdf.showString() method, but we can see it takes three arguments.

These are the same as the original df.show() so we can look at the docstring in the df.show() source code to give us some information:

n : int

Number of rows to show.

truncate : int

Number of characters in a string before truncating

vertical : bool

If set to `True`, print output rows vertically (one line per column value)

If we set the same default values used by PySpark, we can save our dataframe string representation to a variable as follows:

# save summary string to a variable using self._jdf.showString

summary_string = df._jdf.showString(20, 20, False)

print(summary_string)

print(type(summary_string))

+-------+-------+

| county|revenue|

+-------+-------+

| uk| 1000|

| us| 2000|

|germany| 3000|

| france| 4000|

+-------+-------+

<class 'str'>

We can see that the summary_string variable is now of type ‘string’. We have successfully saved the string representation to a variable which we can use later in our program.

Notes

As we are now working with an internal java object the behaviour of the function is a little bit strange.

I came across a few weird issues when trying this for the first time. The following will all raise an error:

- You cannot use named arguments (e.g. specifying n=, truncate=, vertical= etc.)

- You must specify a value for all three arguments, there are no automatic defaults

- You must specify

truncateas an integer. In the Pyspark df.show() you can use a boolean value, however, you cannot pass a boolean value directly to theself._jdf_showString()method.

To alleviate some of these cryptic issues and help colleagues use the functionality, I recommend wrapping self._jdf.showString in your own function with default values (like what is done in PySpark itself) to make it more useable.

def get_df_string_repr(df, n=20, truncate=True, vertical=False):

"""Example wrapper function for interacting with self._jdf.showString"""

if isinstance(truncate, bool) and truncate:

return df._jdf.showString(n, 20, vertical)

else:

return df._jdf.showString(n, int(truncate), vertical)

Code from this post is available in the e4ds-snippets GitHub repository

Happy coding!

Resources

Further Reading

- Unit Testing PySpark Code

- Top tips for using PyTest

- Google Search Console API with Python

- What I learned optimising someone else’s code

- Deploying Dremio on Google Cloud

- Gitmoji: Add Emojis to Your Git Commit Messages!

- Five Tips to Elevate the Readability of your Python Code

- Do Programmers Need to be able to Type Fast?

- How to Manage Multiple Git Accounts on the Same Machine