Unit testing is an essential skill for software development. There are some great Python libraries to help us write and run unit tests, such as Nose and Unittest. But my favourite is PyTest.

I recently read through PyTest’s documentation in more depth to learn about its features.

Here is a list of some more obscure features that I found useful and will start integrating into my own testing workflow. I hope there is something new in this list you didn’t already know…

💻 All code snippets in this post are available in the e4ds-snippets GitHub repository.

General tips for writing tests 👨🎓

1. How to write a good unit-test

Ok, so this one isn’t specific to the PyTest library, but the first tip is to read PyTest's documentation on structuring your unit tests. It is well worth a quick read.

A good test should verify a specific expected behaviour and be able to run independently of other code, i.e. the test should contain all the code required to set up and run the behaviour being tested.

This can be summarised into four stages:

- Arrange – Perform the setup required for the test. E.g. defining inputs

- Act – run the function you want to test

- Assert – verify the output of the function is as expected

- Cleanup – (Optional) clean up any artefacts generated from the test. E.g. output files.

For example:

def sum_list(my_list):
    return sum(my_list)


def test_sum_list():
    # arrange
    test_list = [1, 2, 3]

    # act
    answer = sum_list(test_list)

    # assert
    assert answer == 6

Although this is a trivial example, having a common structure to all your tests helps improve readability.
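The example above has no artefacts to clean up. As a minimal sketch of the optional fourth stage, the test below writes a file and removes it afterwards. The save_report function and the file name are hypothetical, made up purely for illustration:

```python
import os
import tempfile


def save_report(lines, path):
    """Hypothetical function under test: write the lines to a text file."""
    with open(path, "w") as f:
        f.write("\n".join(lines))


def test_save_report():
    # arrange: define the inputs and choose an output location
    lines = ["a", "b"]
    path = os.path.join(tempfile.gettempdir(), "e4ds_report_example.txt")

    try:
        # act
        save_report(lines, path)

        # assert
        with open(path) as f:
            assert f.read() == "a\nb"
    finally:
        # cleanup: remove the output file even if the assertion failed
        if os.path.exists(path):
            os.remove(path)
```

Putting the cleanup in a finally block means the file is removed whether or not the assertion passes, so a failing test does not leave artefacts behind for the next run.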

https://docs.pytest.org/en/7.1.x/explanation/anatomy.html

2. Testing Exceptions

Normally, the first thing we think of testing is the expected output of the function when it runs successfully.

However, it is important to also verify the behaviour of the function when it raises an exception. Particularly if you know which type of inputs should raise certain exceptions.

You can test for exceptions using the pytest.raises context manager.

For example:

import pytest


def divide(a, b):
    """Divide two numbers"""
    return a / b


def test_zero_division():
    # the test passes only if ZeroDivisionError is raised inside the block
    with pytest.raises(ZeroDivisionError):
        divide(1, 0)


def test_type_error():
    with pytest.raises(TypeError):
        divide("abc", 10)
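If you also want to check the error message, pytest.raises accepts a match argument (a regular expression searched against the string form of the exception) and gives access to the raised exception through the object it yields. The sketch below assumes a hypothetical parse_age function, which is not part of the original examples:

```python
import pytest


def parse_age(value):
    """Hypothetical function under test: parse a non-negative integer age."""
    age = int(value)
    if age < 0:
        raise ValueError(f"age must be non-negative, got {age}")
    return age


def test_negative_age_message():
    # match is a regular expression searched against str(exception)
    with pytest.raises(ValueError, match="must be non-negative") as excinfo:
        parse_age("-5")

    # excinfo.value is the exception instance that was raised
    assert "-5" in str(excinfo.value)
```

Checking the message as well as the type helps when a function can raise the same exception type for several different reasons.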

https://docs.pytest.org/en/7.1.x/how-to/assert.html#assertions-about-expected-exceptions

3. Test logging/printing

PyTest allows you to test the printing and logging statements in your code.

There are two inbuilt PyTest fixtures, capsys and caplog , which can be used to track information printed to the terminal by the function.

Test printing outputs

def printing_func(name):
    print(f"Hello {name}")


def test_printing_func(capsys):
    printing_func(name="John")

    # use the capsys fixture to record terminal output
    output = capsys.readouterr()

    assert output.out == "Hello John\n"
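The object returned by capsys.readouterr() also has an err attribute holding anything written to stderr. A minimal sketch, assuming a hypothetical warn_user function that prints to stderr:

```python
import sys


def warn_user(message):
    """Hypothetical function under test: print a warning to stderr."""
    print(f"WARNING: {message}", file=sys.stderr)


def test_warn_user(capsys):
    warn_user("disk almost full")

    captured = capsys.readouterr()

    # nothing should have been written to stdout
    assert captured.out == ""
    # the warning should have been written to stderr
    assert captured.err == "WARNING: disk almost full\n"
```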

https://docs.pytest.org/en/6.2.x/reference.html#std-fixture-capsys

Test logging outputs

import logging


def logging_func():
    logging.info("Running important function")
    # some more code...
    logging.info("Function completed")


def test_logging_func(caplog):
    # use the caplog fixture to record logging records
    caplog.set_level(logging.INFO)
    logging_func()
    records = caplog.records

    # first message
    assert records[0].levelname == 'INFO'
    assert records[0].message == "Running important function"

    # second message
    assert records[1].levelname == 'INFO'
    assert records[1].message == "Function completed"
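If you only care about the message text, a shorter variant is possible. This sketch uses the caplog.at_level context manager and the caplog.messages list, which I believe are available in recent PyTest versions; the logging_func is repeated so the snippet is self-contained:

```python
import logging


def logging_func():
    logging.info("Running important function")
    # some more code...
    logging.info("Function completed")


def test_logging_func_messages(caplog):
    # only change the log level for the duration of the with block
    with caplog.at_level(logging.INFO):
        logging_func()

    # caplog.messages holds the formatted message of each captured record
    assert caplog.messages == [
        "Running important function",
        "Function completed",
    ]
```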

https://docs.pytest.org/en/6.2.x/reference.html#std-fixture-caplog

4. Testing floats

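Arithmetic involving floats can produce small rounding errors in Python, because many decimal fractions cannot be represented exactly in binary floating point.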

For example, this simple function causes a curious error:

def subtract_floats(a, b):
    return a - b


def test_subtract_floats():
    assert subtract_floats(1.2, 1.0) == 0.2

The expected output should be 0.2 but Python returns 0.19999999999999996.

There is nothing wrong with the function’s logic and it shouldn’t fail this test case.

To combat floating point rounding errors in tests, you can use the pytest.approx function:

import pytest


def test_subtract_floats():
    assert subtract_floats(1.2, 1.0) == pytest.approx(0.2)

The test now passes.

Note, you can also apply the approx function to numpy arrays. This is useful when comparing arrays and dataframes.

import numpy as np
import pytest


def test_add_arrays():
    result = np.array([0.1, 0.2]) + np.array([0.2, 0.4])
    assert result == pytest.approx(np.array([0.3, 0.6]))
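The default tolerance of approx is quite strict. If your function is only expected to be accurate to a few decimal places, you can loosen it with the rel (relative) and abs (absolute) arguments. The values below are arbitrary and chosen only to illustrate the idea:

```python
import pytest


def test_default_tolerance():
    # the default tolerance absorbs ordinary rounding error
    assert 0.1 + 0.2 == pytest.approx(0.3)


def test_absolute_tolerance():
    # accept any value within 0.001 of the expected value
    assert 1.0001 == pytest.approx(1.0, abs=1e-3)


def test_relative_tolerance():
    # accept any value within 2% of the expected value
    assert 1010 == pytest.approx(1000, rel=0.02)
    # a 0.1% tolerance is too tight for the same pair of values
    assert 1010 != pytest.approx(1000, rel=1e-3)
```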

https://docs.pytest.org/en/7.1.x/reference/reference.html#pytest-approx

Tips that save you time ⏳

5. Save time by only running certain tests

Running tests should aid your workflow and not be a barrier. Long running test suites can slow you down and put you off running tests regularly.

Normally, you don’t need to run the entire test suite every time you make a change, particularly if you are only working on a small part of the codebase.

So it can be handy to be able to run a subset of tests relevant to the code you are working on.

PyTest comes with a few options for selecting which tests to run:

Using the -k flag

You can use the -k flag when running PyTest to only run tests which match a given substring.

For example if you had the following tests:

def test_preprocess_categorical_columns():

...

def test_preprocess_numerical_columns():

...

def test_preprocess_text():

...

def test_train_model():

...

You could use the following command to only run the first test which contains the substring ‘categorical’:

# run first test only

pytest -k categorical

You could run the following command to only run the tests which contain the name ‘preprocess’ (the first three tests):

# run first three tests only

pytest -k preprocess

Logical expressions are also allowed. For example, the following will run the tests which contain ‘preprocess’ but exclude the one that contains ‘text’. This would run the first two tests but not the third:

# run first two tests only

pytest -k "preprocess and not text"

A full explanation of valid phrases for the -k flag is provided in the command line flags documentation: https://docs.pytest.org/en/7.2.x/reference/reference.html#command-line-flags

Running tests in a single test file

If your tests are split across multiple files, you can run the tests from a single file by explicitly passing the file name when running PyTest:

# only run tests defined in 'tests/test_file1.py' file

pytest tests/test_file1.py

Using markers

You can also use pytest ‘markers’ to mark certain tests. This can be useful for marking ‘slow’ tests which you can then exclude with the -m flag.

For example:

import time

import pytest


def my_slow_func():
    # some long running code...
    time.sleep(5)
    return True


@pytest.mark.slow
def test_my_slow_func():
    assert my_slow_func()

The test_my_slow_func test will take longer to run than the other tests.

Having marked it with the @pytest.mark.slow decorator, we can exclude it from a test run using the -m flag:

# exclude running tests marked as slow

pytest -m "not slow"
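Custom markers such as slow are not built in, and PyTest emits a warning about unknown marks unless they are registered. One way to register a marker, sketched here under the assumption that your project has a conftest.py at the root of the tests directory, is the pytest_configure hook (the description text is my own wording):

```python
# conftest.py (assumed location: the root of your tests directory)


def pytest_configure(config):
    # register the custom 'slow' marker so PyTest recognises it
    config.addinivalue_line(
        "markers",
        "slow: marks tests as slow (deselect with -m 'not slow')",
    )
```

Markers can alternatively be listed under the markers option in your PyTest configuration file; use whichever approach fits your project.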

Markers can also be handy if you need to skip tests in certain circumstances, for example if your CI build runs the tests against multiple versions of Python and you know a certain test will fail on a particular version:

import sys

import pytest


# skip this test when running on Python versions older than 3.10
@pytest.mark.skipif(sys.version_info < (3, 10), reason="requires python3.10 or higher")
def test_function():
    ...

https://docs.pytest.org/en/7.1.x/example/markers.html

https://docs.pytest.org/en/7.1.x/how-to/skipping.html

6. Only re-running failed tests

When you do run the entire test suite, you might find a small number of tests failed.

Once you have debugged the issue and updated the code, rather than running the entire test suite again, you can use the --lf flag to only run the tests which failed on the last run.

You can verify the updated code passes these tests before running the entire test suite again.

# only run tests which failed on last run

pytest --lf

Alternatively, you can still run the entire test suite but start with the tests that failed last time using the --ff flag.

# run all tests but run failed tests first

pytest --ff

https://docs.pytest.org/en/7.1.x/how-to/cache.html#rerunning-only-failures-or-failures-first

Tips that save you writing code 🚀

7. Parametrising tests

When you want to test multiple different inputs for a specific function, it is common for people to write multiple assert statements within the test function. For example:

import re


def remove_special_characters(input_string):
    # remove every character that is not a letter or a digit
    return re.sub(r"[^A-Za-z0-9]+", "", input_string)


def test_remove_special_characters():
    assert remove_special_characters("hi*?.") == "hi"
    assert remove_special_characters("f*()oo") == "foo"
    assert remove_special_characters("1234bar") == "1234bar"
    assert remove_special_characters("") == ""

There is a better way to do this in PyTest using ‘parameterized tests’:

import pytest


@pytest.mark.parametrize(
    "input_string,expected",
    [
        ("hi*?.", "hi"),
        ("f*()oo", "foo"),
        ("1234bar", "1234bar"),
        ("", ""),
    ],
)
def test_remove_special_characters(input_string, expected):
    assert remove_special_characters(input_string) == expected

This has the benefit of reducing duplicated code. Additionally, PyTest runs an individual test for each parameterized input, so if one of them fails it is easier to identify. With the original implementation, PyTest runs the multiple assert statements as a single test: the test stops at the first failing assert, so the whole test fails and the remaining inputs are never checked.
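Parametrization is not limited to input and output values. As a sketch, the two exception tests from tip 2 could be collapsed into one parametrized test by passing the expected exception type as a parameter (the parameter names here are my own choice):

```python
import pytest


def divide(a, b):
    """Divide two numbers"""
    return a / b


@pytest.mark.parametrize(
    "a,b,expected_exception",
    [
        (1, 0, ZeroDivisionError),
        ("abc", 10, TypeError),
    ],
)
def test_divide_raises(a, b, expected_exception):
    # each parameter set runs as its own test case
    with pytest.raises(expected_exception):
        divide(a, b)
```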

8. Run tests from docstrings

Another cool trick is defining and running tests directly from docstrings.

You can define the test case in the docstring as follows:

def add(a, b):
    """Add two numbers

    >>> add(2,2)
    4
    """
    return a + b

Then you can include the docstring tests in your test suite by adding the --doctest-modules flag when running the pytest command.

pytest --doctest-modules

Defining tests in docstrings can be very helpful for other developers using your code as it explicitly shows the expected inputs and outputs of your function right in the function definition.

I find this works well for functions with ‘simple’ data structures as inputs and outputs, as an alternative to writing full-blown tests which add more code to the test suite.
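Docstring tests can document error cases too. In the standard doctest format, an expected exception is written as a traceback header followed by the final exception line, with the stack details elided. A small sketch, reusing the divide function from tip 2:

```python
def divide(a, b):
    """Divide two numbers.

    >>> divide(6, 3)
    2.0
    >>> divide(1, 0)
    Traceback (most recent call last):
        ...
    ZeroDivisionError: division by zero
    """
    return a / b
```

Both examples are collected when running pytest with the --doctest-modules flag.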

https://docs.pytest.org/en/7.1.x/how-to/doctest.html#how-to-run-doctests

9. Inbuilt pytest fixtures

PyTest includes a number of inbuilt fixtures that can be very useful.

We briefly covered a couple of these fixtures in tip number 3 – capsys and caplog – but a full list can be found here: https://docs.pytest.org/en/stable/reference/fixtures.html#built-in-fixtures

These fixtures can be accessed by your test through simply adding them as an argument to the test function.

Two of the most useful inbuilt fixtures in my opinion are the request fixture and the tmp_path_factory fixture.

You can check out my article on using the request fixture to use fixtures in parameterized tests.

The tmp_path_factory fixture can be used to create temporary directories for running tests, for example when you are testing a function which needs to save a file to a certain directory.
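As a minimal sketch of how this might look, the session-scoped fixture below asks tmp_path_factory for a temporary directory and hands it to the test. The save_text function, the data_dir fixture name and the file name are hypothetical, chosen only for illustration:

```python
import pytest


def save_text(text, path):
    """Hypothetical function under test: write text to the given path."""
    path.write_text(text)


@pytest.fixture(scope="session")
def data_dir(tmp_path_factory):
    # mktemp creates a new temporary directory and returns it as a pathlib.Path
    return tmp_path_factory.mktemp("data")


def test_save_text(data_dir):
    output_file = data_dir / "out.txt"

    save_text("hello", output_file)

    assert output_file.read_text() == "hello"
```

Because PyTest manages the temporary directory, the test does not need its own cleanup step for the output file.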

https://docs.pytest.org/en/stable/reference/fixtures.html#built-in-fixtures

https://docs.pytest.org/en/7.1.x/how-to/tmp_path.html#the-tmp-path-factory-fixture

Tips to help with debugging

10. Increase the verbosity of tests

The default output of PyTest can be quite minimal. If your tests are failing, it can be helpful to increase the amount of information provided in the terminal output.

You can do this with the verbose flag, -vv:

# increase the amount of information provided by PyTest in the terminal output

pytest -vv

https://docs.pytest.org/en/7.2.x/reference/reference.html#command-line-flags

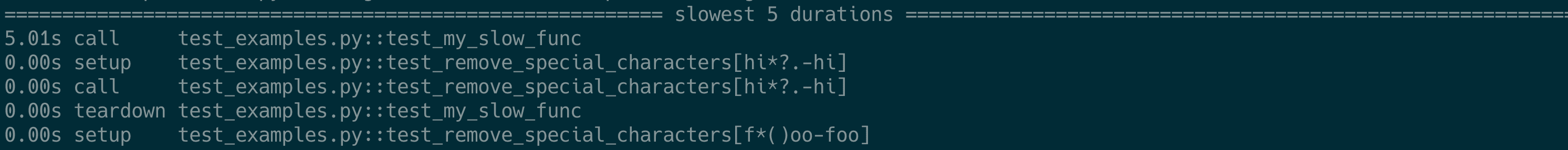

11. Show duration of tests

If your test suite takes a long time to run, you might want to understand which tests are taking the longest to run. You can then try and optimise these tests or use markers to exclude them as demonstrated above.

You can find out which tests take the longest time to run using the --durations flag.

By default, PyTest leaves very short durations (under 0.005s) out of this report, so you also need to pass the verbosity flag, -vv, to show the full durations report.

# show top 5 longest running tests

pytest --durations=5 -vv

https://docs.pytest.org/en/7.2.x/reference/reference.html#command-line-flags

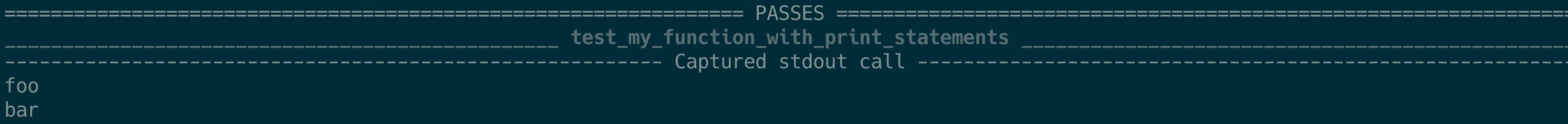

12. Show the output of print statements in your code

Sometimes you will have print statements in your source code to help with debugging your functions.

By default, PyTest captures the output of these print statements and will not show it if the test passes.

You can override this behaviour by using the -rP flag.

def my_function_with_print_statements():
    print("foo")
    print("bar")
    return True


def test_my_function_with_print_statements():
    assert my_function_with_print_statements()

# run tests but show all printed output of passing tests

pytest -rP

https://docs.pytest.org/en/7.2.x/reference/reference.html#command-line-flags

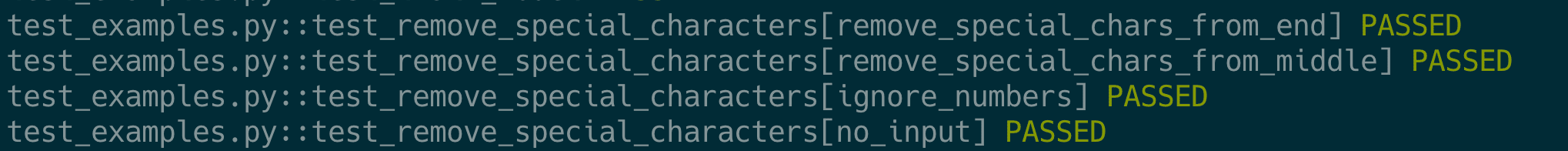

13. Assign IDs to parametrized tests

One potential issue with running parametrized tests is that they all appear under the same test name in the terminal output, distinguished only by an ID that PyTest auto-generates from the parameter values, even though they are technically testing for different behaviours.

You can add IDs to your parametrized tests to give each parameterized test a unique name which helps identify it. It also increases the readability of your tests as you can be explicit about what you are trying to test for.

There are two options here for adding IDs to your tests:

Option 1: The id argument

Reusing the parameterized example from tip number 7:

@pytest.mark.parametrize(
    "input_string,expected",
    [
        ("hi*?.", "hi"),
        ("f*()oo", "foo"),
        ("1234bar", "1234bar"),
        ("", ""),
    ],
    ids=[
        "remove_special_chars_from_end",
        "remove_special_chars_from_middle",
        "ignore_numbers",
        "no_input",
    ],
)
def test_remove_special_characters(input_string, expected):
    assert remove_special_characters(input_string) == expected

Option 2: Using pytest.param

Alternatively using the pytest.param wrapper:

@pytest.mark.parametrize(
    "input_string,expected",
    [
        pytest.param("hi*?.", "hi", id="remove_special_chars_from_end"),
        pytest.param("f*()oo", "foo", id="remove_special_chars_from_middle"),
        pytest.param("1234bar", "1234bar", id="ignore_numbers"),
        pytest.param("", "", id="no_input"),
    ],
)
def test_remove_special_characters(input_string, expected):
    assert remove_special_characters(input_string) == expected

Generally I prefer using option 1 as I think it is neater. But if you are running a lot of parameterized inputs that cover many lines, it might be more readable to use option 2.

https://docs.pytest.org/en/stable/example/parametrize.html#different-options-for-test-ids

Conclusion

PyTest is a great testing framework with many useful features. The documentation is generally very good and I highly recommend browsing through for more information and other great features.

I hope you learned something new – I would be interested to know what other tips you have for using PyTest.

Happy testing!
