Python’s logging library is very powerful but generally under-utilised in data science projects.

Most developers default to using standard print statements to track important events in their applications or data pipelines.

It makes sense. ‘Print’ does the job: it’s easy to implement, requires no boilerplate code and there are no external libraries to understand.

But as the code base gets larger and you want to move the code into ‘production’, you can quickly run into issues caused by the inflexibility of print statements. You also miss out on some great features of Python’s logging library that can help with debugging.

In principle, the logging library is simple to use, particularly for single scripts.

But in my experience, I found it difficult to clearly understand how to set up logging for more complex applications with multiple modules and files.

Admittedly, this might just be due to my chronic inability to read documentation before starting to use a library. But maybe you have had the same challenges, which is probably why you clicked on this article.

It turns out there is a simple way to set up logging for complex projects without lots of boilerplate code in each file. But I couldn’t find a single source that distilled the information in the context of data science projects. Hopefully, this post can provide just that.

Here is how I set up logging for my data science projects with minimal boilerplate code and a simple configuration file.

Why use logging instead of printing?

First of all, let’s discuss the argument for using Python’s logging library in your projects.

Logging is primarily for your benefit as the developer

Print/logging statements are for the developer’s benefit. Not the computer’s.

Logging statements help diagnose and audit events and issues related to the proper functioning of the application.

The easier it is for you to include/exclude relevant information in your log statements, the more efficient you can be in monitoring your application.

Print statements are inflexible

They can’t be ‘turned off’. If you want to stop printing a statement you have to change the source code to delete or comment out the line.

In a large code base, it can be easy to forget to remove all the random print statements you used for debugging.

Logging allows you to add context

Python’s logging library allows you to easily add metadata to your logs, such as timestamp, module location and severity level (DEBUG, INFO, ERROR etc.). This metadata is automatically added without having to hard code it into your statement.

The metadata is also structured to provide consistency throughout your project, which can make the logs much easier to read when debugging.

Send logs to different places and formats

Print statements send the output to the terminal. When you close your terminal session the print statements are lost forever.

The logging library allows you to send logs to different destinations, including a file, which is useful for recording the logs for future analysis.

You can also send the logs to multiple locations at the same time. This might be useful if you need logging for multiple use cases. For example, general debugging from the terminal output as well as recording of critical log events in a file for auditing purposes.

Control behaviour via configuration

Logging can be controlled using a configuration file. Having a configuration file ensures consistency across the project and separation of config from code.

This also allows you to easily maintain different configurations depending on the environment (e.g. dev vs production) without needing to change any of the source code.

Logging 101

Before working through an example, there are three key concepts from the logging module to explain: loggers, formatters and handlers.

Logger

The object used to generate the logs is instantiated via:

import logging

logger = logging.getLogger(__name__)

The ‘logger’ object creates and controls logging statements in the project.

You can name the logger anything you want, but it is a good practice to instantiate a new logger for each module and use __name__ for the logger’s name (as demonstrated above).

This means that logger names track the package/module hierarchy, which helps developers quickly find where in the codebase the log was generated.
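As a small sketch of how dotted names turn into a hierarchy: the names below mirror the example project and are spelled out by hand purely for illustration (in a real module you would pass __name__ instead).

```python
import logging

# In a real module you would write logging.getLogger(__name__);
# the names are written out here to make the hierarchy visible.
package_logger = logging.getLogger("data_processing")
module_logger = logging.getLogger("data_processing.processor")

# Dots in the name define the parent/child relationship.
print(module_logger.name)
print(module_logger.parent is package_logger)

# getLogger returns the same object every time it is called with the same name.
print(logging.getLogger("data_processing.processor") is module_logger)
```

Because the module logger is a child of the package logger, anything configured higher up the hierarchy (ultimately on the root logger) applies to it as well.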

Formatters

Formatter objects determine the order, structure, and contents of the log message.

Every time you call the logger object, a LogRecord is generated. A LogRecord object contains a number of attributes, including when it was created, the module where it was created and the message itself.

We can define which attributes to include in the final log statement output and any formatting using the Formatter object.

For example:

# formatter definition

'%(asctime)s - %(name)s - %(levelname)s - %(message)s'

# example log output

2022-09-25 14:10:55,922 - __main__ - INFO - Program Started
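To see how a format string like this ends up in the output, here is a minimal sketch that attaches a Formatter to a handler. The logger name formatter_demo and the in-memory stream are assumptions made so the example is self-contained; in a real script the handler would normally write to the terminal.

```python
import io
import logging

# An in-memory stream stands in for the terminal so the output can be inspected.
stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(
    logging.Formatter("%(asctime)s - %(name)s - %(levelname)s - %(message)s")
)

logger = logging.getLogger("formatter_demo")  # hypothetical logger name
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False  # keep the demo isolated from any root handlers

logger.info("Program Started")
print(stream.getvalue(), end="")
```

The fields appear in the order given in the format string: timestamp, logger name, level, then the message.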

Handlers

Handlers are responsible for sending the logs to different destinations.

Log messages can be sent to multiple locations, for example to stdout (e.g. the terminal) and to a file.

The most common handlers are StreamHandler, which sends log messages to the terminal, and FileHandler which sends messages to a file.

The logging library also comes with a number of powerful handlers. For example, the RotatingFileHandler and TimedRotatingFileHandler save logs to files and automatically rotate which file the logs are written to when the file reaches a certain size or time limit.
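As a rough sketch of sending the same logs to two destinations, the example below attaches a StreamHandler and a RotatingFileHandler to one logger. The logger name, the size limit and the temporary directory are assumptions for illustration; a real project would point the file handler at its logs directory.

```python
import logging
import sys
import tempfile
from logging.handlers import RotatingFileHandler
from pathlib import Path

# A temporary directory keeps the sketch self-contained.
log_path = Path(tempfile.mkdtemp()) / "pipeline.log"

formatter = logging.Formatter("%(asctime)s - %(name)s - %(levelname)s - %(message)s")

console_handler = logging.StreamHandler(sys.stdout)
console_handler.setLevel(logging.DEBUG)  # everything goes to the terminal
console_handler.setFormatter(formatter)

# Rotate once the file reaches roughly 1 MB, keeping three old files.
file_handler = RotatingFileHandler(log_path, maxBytes=1_000_000, backupCount=3)
file_handler.setLevel(logging.WARNING)  # only WARNING and above are kept on disk
file_handler.setFormatter(formatter)

logger = logging.getLogger("handler_demo")  # hypothetical logger name
logger.setLevel(logging.DEBUG)
logger.propagate = False
logger.addHandler(console_handler)
logger.addHandler(file_handler)

logger.info("shown in the terminal only")
logger.warning("shown in the terminal and written to the file")

file_contents = log_path.read_text()
```

Each handler has its own level, so the terminal can stay verbose while the file only records the events worth auditing.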

You can also define your own custom handlers if required.
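A custom handler only needs to subclass logging.Handler and implement emit. The ListHandler below is a hypothetical example that keeps formatted messages in a list, which can be handy in tests; it is a sketch, not something shipped with the logging library.

```python
import logging


class ListHandler(logging.Handler):
    """Hypothetical handler that stores formatted messages in a list."""

    def __init__(self, level=logging.NOTSET):
        super().__init__(level)
        self.messages = []

    def emit(self, record):
        # emit() is the one method a custom handler has to implement.
        self.messages.append(self.format(record))


logger = logging.getLogger("custom_handler_demo")  # hypothetical logger name
logger.setLevel(logging.INFO)
logger.propagate = False

list_handler = ListHandler()
list_handler.setFormatter(logging.Formatter("%(levelname)s - %(message)s"))
logger.addHandler(list_handler)

logger.info("rows loaded: %d", 1000)
print(list_handler.messages)
```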

Key Takeaways

- Loggers are instantiated using logging.getLogger()

- Use __name__ to automatically name your loggers

- A logger needs a ‘formatter’ and a ‘handler’ to specify the format and location of the log messages

- If no handler is defined, you will not see any INFO or DEBUG messages; only WARNING and above are printed to stderr by the library’s last-resort handler

Common Python Project Structure

# common project layout
├── data                    <- directory for storing local data
├── config                  <- directory for storing configs
├── logs                    <- directory for storing logs
├── requirements.txt
├── setup.py
└── src
    ├── main.py             <- main script
    ├── data_processing     <- module for data processing
    │   ├── __init__.py
    │   └── processor.py
    └── model_training      <- module for model_training
        ├── __init__.py
        └── trainer.py

The above shows a typical project layout for a data science project.

💻 The example project layout and code is available in the e4ds-snippets GitHub repo

We have a src directory with the source code for the application, as well as directories for storing data and configurations separately from the code.

We will use this as an example project for setting up logging.

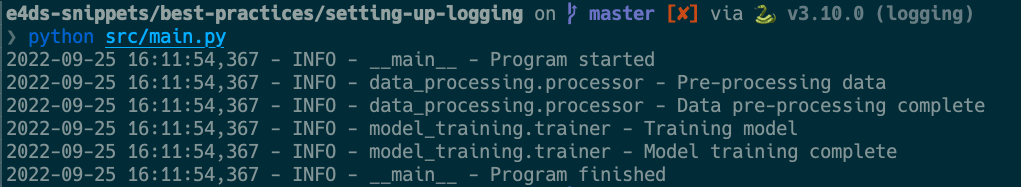

The main entry point for the program is in the src/main.py file. The main program calls code from the src/data_processing and src/model_training modules in order to preprocess the data and train the model. We will use log messages from the relevant modules to record the progress of the pipeline.

You can set up logging either by writing Python code to define your loggers or by using a configuration file.

Let’s work through an example for both approaches.

Basic logging setup

We can set up a logger for the project that simply prints the log messages to the terminal. This is similar to how print statements work, however, we will enrich the messages with information from the LogRecord attributes.

We initialise the logger and define the handler (StreamHandler) and the format of the messages in the main.py file. We only have to do this once in the main file: because module loggers pass their records up to the root logger, the settings apply throughout the project.
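A minimal sketch of what the top of main.py could look like under this approach. The format string and the stand-in main function are assumptions for illustration and not necessarily the code from the example repo.

```python
# src/main.py (sketch)
import logging
import sys

# Configure the root logger once: one StreamHandler plus a message format.
root_logger = logging.getLogger()
root_logger.setLevel(logging.INFO)
handler = logging.StreamHandler(sys.stdout)
handler.setFormatter(
    logging.Formatter("%(asctime)s - %(name)s - %(levelname)s - %(message)s")
)
root_logger.addHandler(handler)

logger = logging.getLogger(__name__)


def main():
    logger.info("Program started")
    # Calls into data_processing and model_training would go here.
    logger.info("Program finished")


if __name__ == "__main__":
    main()
```

Configuring the root logger (rather than the logger named after main.py) is what lets every other module's logger reuse the same handler and format.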

In each module where we want to use logging, we only need to import the logging library and instantiate the logger object at the top of the file.
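For a module such as src/data_processing/processor.py, that could look like the sketch below. The process_data function and its behaviour are hypothetical, included only to show where the log calls go.

```python
# src/data_processing/processor.py (sketch)
import logging

# No handlers or formatters here: records are passed up to the root logger.
logger = logging.getLogger(__name__)


def process_data(rows):
    """Hypothetical processing step that drops missing values."""
    logger.info("Processing %d rows", len(rows))
    cleaned = [row for row in rows if row is not None]
    logger.debug("Dropped %d empty rows", len(rows) - len(cleaned))
    return cleaned
```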

That’s it.

Using a configuration file

The four or five lines of code at the top of the main.py file can be replaced using a configuration file.

My preferred approach for larger projects is to use configuration files, because they let you:

- define and use different logging configurations for development and production environments

- separate configuration from code, making it easier to reuse the source code elsewhere with different logging requirements

- add multiple loggers and formatters to the project without adding many more lines to the source code

We can change the code in the main.py file to load from a configuration file using logging.config.fileConfig.

I have created a function (setup_logging) which loads a configuration file depending on the value of an environment variable (e.g. dev or prod). This allows you to easily use a different configuration in development vs production without having to change any source code.
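A function along these lines might look like the following sketch. The environment variable name (ENV), its default value and the logging.<env>.ini file naming scheme are all assumptions made for illustration; adapt them to your own project.

```python
import logging
import logging.config
import os
from pathlib import Path

CONFIG_DIR = Path("config")  # assumed location of the config files


def setup_logging(config_dir=CONFIG_DIR):
    """Load logging.<env>.ini, where <env> comes from the ENV variable."""
    env = os.environ.get("ENV", "dev")  # assumed variable name and default
    config_path = Path(config_dir) / f"logging.{env}.ini"  # assumed naming scheme
    if not config_path.is_file():
        raise FileNotFoundError(f"No logging config found at {config_path}")
    # disable_existing_loggers=False keeps module-level loggers that were
    # created at import time (before this call) working.
    logging.config.fileConfig(str(config_path), disable_existing_loggers=False)
    return config_path
```

Calling setup_logging() once at the start of main.py would then replace the handler and formatter code. Raising an error for a missing file is a deliberate choice here, so a typo in the environment name is noticed straight away.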

💻 The example project code is available in the e4ds-snippets GitHub repo

Example configuration file

In the configuration file we have defined two loggers: one which sends logs to the terminal and one which sends logs to a file.

More information about the logging configuration file format can be found in the logging documentation

Debugging tips

I had quite a few issues trying to set up logging in my projects initially where I did not see any of my logs in the terminal or file outputs.

Here are a few tips:

Ensure you have specified all your handlers in the config

If there is a misspecified handler in your configuration file you might not see any logs printed in the terminal (or other destinations). Unfortunately, the logging library seems to fail silently and doesn’t give many indicators as to why your logging setup isn’t working as expected.

Ensure you have set the correct ‘level’ setting

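For example: logger.setLevel(logging.DEBUG). The root logger’s default level is logging.WARNING, and loggers created with logging.getLogger(__name__) inherit it unless a level is set on them or on the root logger. This means only WARNING, ERROR and CRITICAL messages will be recorded by default. If your log messages use INFO or DEBUG, you need to set the level explicitly or your messages will not show. Handlers have their own level setting too, so it is worth checking both.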
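A quick way to check which level a logger is actually using is sketched below; the logger name level_demo is hypothetical.

```python
import logging

logger = logging.getLogger("level_demo")  # hypothetical logger name

# A new logger has no level of its own (NOTSET) and falls back to the
# level of its nearest configured ancestor, ultimately the root logger.
print(logger.level == logging.NOTSET)
print(logging.getLevelName(logger.getEffectiveLevel()))

# After setting the level explicitly, DEBUG and INFO messages are enabled.
logger.setLevel(logging.DEBUG)
print(logger.isEnabledFor(logging.DEBUG))
print(logging.getLevelName(logger.getEffectiveLevel()))
```

If getEffectiveLevel reports WARNING when you expected DEBUG, the level has not been set where you think it has.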

Don’t get confused between ‘logging’ and ‘logger’

I’m embarrassed to admit it, but I have spent a long time in the past trying to work out why messages weren’t showing. It turns out I was using logging.info() instead of logger.info(). I thought I would include it here in case it isn’t just me who has typos. Worth checking. 🤦‍♂️
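The difference is easy to reproduce. In the sketch below (the logger name typo_demo and the in-memory stream are assumptions for illustration), only the call made through the logger object reaches the handler attached to it; logging.info() goes to the root logger instead.

```python
import io
import logging

stream = io.StringIO()  # stands in for the terminal so the output can be inspected
handler = logging.StreamHandler(stream)
handler.setFormatter(logging.Formatter("%(name)s - %(message)s"))

logger = logging.getLogger("typo_demo")  # hypothetical logger name
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False

logger.info("sent through the module's logger")  # reaches our handler
logging.info("sent through the root logger")     # bypasses 'typo_demo' entirely

print(stream.getvalue(), end="")
```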

